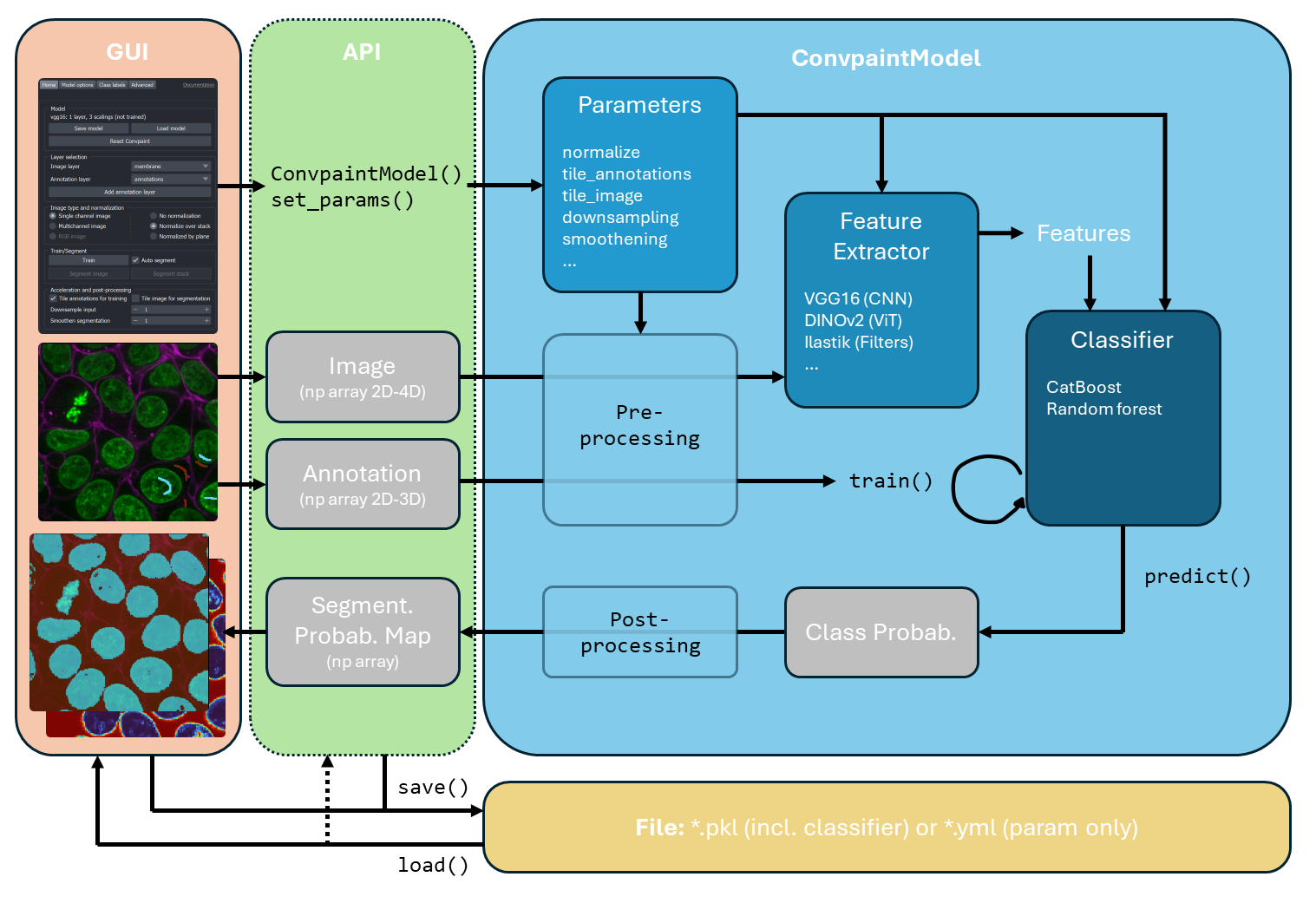

ConvpaintModel Class

The ConvpaintModel class is the core of Convpaint and combines feature extraction with pixel classification.

It is the main class used for both the Convpaint napari plugin and the Convpaint API.

This setup allows for a similar workflow in both environments and lets the user transition between them seamlessly.

Each ConvpaintModel instance in essence consists of three components:

- a feature extractor model, defined in a separate class

- a classifier, which is responsible for the final pixel classification

- a Param object, which defines the details of the model procedures, and is also defined in a separate class

Note that the ConvpaintModel and its FeatureExtractor model are closely linked to each other. The intended way to use them is to create a ConvpaintModel instance, which will in turn create the corresponding FeatureExtractor instance. If a ConvpaintModel with another feature extractor is desired (including different configurations in layers or GPU usage), a new ConvpaintModel instance should be created. Other parameters of the ConvpaintModel, though, can easily be changed later.

Input image dimensions and channels (convention across all training, prediction and feature extraction methods):

- 2D inputs: Will be treated as single-channel (gray-scale) images; if the feature extractor takes multiple input channels, the input will be repeated across channels.

- 3D inputs: Depending on the channel_mode parameter in the Param object, the first dimension will either be treated as channels (if channel_mode is "multi" or "rgb") or as a stack of 2D images (if channel_mode is "single"); in the "single" case, if the feature extractor takes multiple input channels, the single input channel will be repeated accordingly.

- 4D inputs: Will be treated as a stack of multi-channel images, with the first dimension as channels.
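The convention above can be summarized as a small lookup. This is a minimal sketch and not Convpaint's own code: the helper name describe_input_axes and the axis labels ("C", "Z", "H", "W") are illustrative assumptions, while the channel_mode values are the ones named in the text.

```python
def describe_input_axes(ndim, channel_mode="single"):
    """Return the role of each axis for an input with `ndim` dimensions.

    Hypothetical helper mirroring the documented convention;
    it is not part of the Convpaint API.
    """
    if channel_mode not in ("single", "multi", "rgb"):
        raise ValueError(f"unknown channel_mode: {channel_mode!r}")
    if ndim == 2:
        # Always treated as one single-channel (gray-scale) image.
        return ("H", "W")
    if ndim == 3:
        if channel_mode in ("multi", "rgb"):
            # The first dimension holds the channels.
            return ("C", "H", "W")
        # "single": the first dimension is a stack of 2D images.
        return ("Z", "H", "W")
    if ndim == 4:
        # Stack of multi-channel images, channels first.
        return ("C", "Z", "H", "W")
    raise ValueError(f"unsupported number of dimensions: {ndim}")


print(describe_input_axes(3, "rgb"))     # first axis read as channels
print(describe_input_axes(3, "single"))  # first axis read as a stack
```

The same 3D array is therefore interpreted differently depending on channel_mode, which is why the parameter should be set before training or prediction.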

__init__(alias=None, model_path=None, param=None, fe_name=None, **kwargs)

Initializes a Convpaint model. This can be done with an alias, a model path, a Param object, or a feature extractor name. If initialized by FE name, other parameters can also be given to override the defaults of the FE model.

If none of an alias, a model path, a Param object, or a feature extractor name is given, a default Convpaint model is created (defined in the get_default_params() method).

The four ways for initialization in detail:

- By providing an alias (e.g., "vgg-s", "dino", "gaussian", etc.), in which case a corresponding model configuration will be loaded.

- By providing a saved model path (model_path) to load a model defined earlier. This can be a .pkl file (holding the FE model, classifier and Param object) or .yml file (only defining the model parameters).

- By providing a Param object, which contains model parameters.

- By providing the name of the feature extractor and other parameters such as GPU usage, and feature extraction layers, in which case these kwargs will override the defaults of the feature extractor model.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| alias | str | Alias of a predefined model configuration | None |
| model_path | str | Path to a saved model configuration | None |
| param | Param | Param object with the model parameters (see Param class for details) | None |
| fe_name | str | Name of the feature extractor model | None |
| **kwargs | additional parameters | Additional parameters to override defaults for the model or feature extractor (see Param class for details) | {} |

Raises:

| Type | Description |
|---|---|
| ValueError | If more than one of alias, model_path, param, or fe_name is provided. |
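The rule that at most one initialization source may be given can be sketched as follows. This is an illustration of the documented behavior, not the library's implementation; the function name pick_init_source is hypothetical, and only the four argument names and the ValueError come from the description above.

```python
def pick_init_source(alias=None, model_path=None, param=None, fe_name=None):
    """Return which initialization source is used, or "default" if none is given.

    Hypothetical helper: raises ValueError when more than one source is given,
    mirroring the documented constraint of the constructor.
    """
    given = {
        "alias": alias,
        "model_path": model_path,
        "param": param,
        "fe_name": fe_name,
    }
    used = [name for name, value in given.items() if value is not None]
    if len(used) > 1:
        raise ValueError(f"Only one of {list(given)} may be given, got {used}.")
    return used[0] if used else "default"


print(pick_init_source())               # no source: default model
print(pick_init_source(alias="vgg-s"))  # a single source is fine
```

With the package installed, the corresponding constructor calls would be, for example, ConvpaintModel() for the default model or ConvpaintModel(alias="vgg-s") for a predefined configuration.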

get_fe_models_types()

staticmethod

Returns a dictionary of all available feature extractors; names as keys and types as values.

Returns:

| Name | Type | Description |
|---|---|---|
| models_dict | dict | Dictionary of all available feature extractors; names as keys and types as values. |

get_default_params()

staticmethod

Returns a param object, which defines the default Convpaint model.

Returns:

| Name | Type | Description |
|---|---|---|
| def_param | Param | Param object with the default Convpaint model parameters. |

get_param(key)

Returns the value of the given parameter key.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| key | str | Key of the parameter to get | required |

Returns:

| Name | Type | Description |
|---|---|---|
| param_value | any | Value of the parameter with the given key |

get_params()

Returns all parameters of the model in the form of a copy of the Param object.

Returns:

| Name | Type | Description |
|---|---|---|
| param | Param | Copy of the Param object with all parameters of the model |

set_param(key, val, ignore_warnings=False)

Sets the value of the given parameter key.

Raises a warning if key FE parameters are set (these are intended to be set only at initialization), unless ignore_warnings is set to True.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| key | str | Key of the parameter to set | required |
| val | any | Value to set the parameter to | required |
| ignore_warnings | bool | Whether to suppress the warning for setting FE parameters | False |

set_params(param=None, ignore_warnings=False, **kwargs)

Sets the parameters, given either as a Param object or as keyword arguments.

Note that the model is not reset and no new FE model is created. If fe_name, fe_use_gpu, or fe_layers needs to change, create a new ConvpaintModel instead.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| param | Param | Param object with the parameters to set | None |
| ignore_warnings | bool | Whether to suppress the warning for setting FE parameters | False |
| **kwargs | parameters as keyword arguments | Parameters to set as keyword arguments (instead of a Param object) | {} |

Raises:

| Type | Description |
|---|---|
| ValueError | If both a Param object and keyword arguments are provided. |
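The behavior described for set_param and set_params (either a Param object or keyword arguments, plus a warning when FE parameters are touched) can be illustrated with plain dictionaries. This is a sketch under stated assumptions: apply_param_updates is a hypothetical function, dictionaries stand in for Param objects, and the warning text is invented. Only the key names fe_name, fe_use_gpu and fe_layers are taken from the text.

```python
import warnings

# FE-related keys named in the documentation above.
FE_KEYS = ("fe_name", "fe_use_gpu", "fe_layers")


def apply_param_updates(current, param=None, ignore_warnings=False, **kwargs):
    """Return a copy of `current` with updates applied (dicts stand in for Param)."""
    if param is not None and kwargs:
        raise ValueError("Provide either a Param object or keyword arguments, not both.")
    updates = dict(param) if param is not None else kwargs
    touched_fe_keys = [key for key in FE_KEYS if key in updates]
    if touched_fe_keys and not ignore_warnings:
        # Setting these does not rebuild the feature extractor.
        warnings.warn(
            f"{touched_fe_keys} set without creating a new feature extractor; "
            "consider creating a new model instead."
        )
    new_params = dict(current)
    new_params.update(updates)
    return new_params


base = {"fe_name": "some_fe", "channel_mode": "single"}
updated = apply_param_updates(base, channel_mode="rgb")
print(updated["channel_mode"])
```

Returning a copy rather than mutating the input keeps the sketch side-effect free; whether the library mutates its Param object in place is not stated here.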

save(model_path, create_pkl=True, create_yml=True)

Saves the model to a pkl and/or yml file.

The pkl file includes the classifier and the param object, as well as the features and targets (when using memory_mode). The yml file includes only the parameters of the model.

Note: A saved model is intended to be loaded only at model initialization.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| model_path | str | Path to save the model to. | required |
| create_pkl | bool | Whether to create a pkl file | True |
| create_yml | bool | Whether to create a yml file | True |

create_fe(name, use_gpu=None, layers=None)

staticmethod

Creates a feature extractor model based on the given parameters.

Importantly, the feature extractor model is returned, but not saved in the model. If you want to initialize a ConvpaintModel with this feature extractor, create a new instance of ConvpaintModel and specify the feature extractor parameters.

Distinguishes between different types of feature extractors such as Hookmodels and initializes them accordingly.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| name | str | Name of the feature extractor model | required |
| use_gpu | bool | Whether to use GPU for the feature extractor | None |
| layers | list[str] | List of layer names to extract features from | None |

Returns:

| Name | Type | Description |
|---|---|---|
| fe_model | FeatureExtractor | The created feature extractor model |
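The idea of a name-to-type registry with type-dependent initialization can be sketched as below. Everything in this block is hypothetical (the Toy classes, the registry entries, and create_toy_fe); it only illustrates the pattern described for create_fe and get_fe_models_types, not how Convpaint implements it.

```python
class ToyFilterFE:
    """Hypothetical extractor without selectable layers."""

    def __init__(self, use_gpu=False):
        self.use_gpu = use_gpu
        self.layers = None


class ToyHookFE:
    """Hypothetical extractor that reads features from named layers."""

    default_layers = ["layer0"]

    def __init__(self, use_gpu=False, layers=None):
        self.use_gpu = use_gpu
        # Fall back to the extractor's own defaults when no layers are given.
        self.layers = list(layers) if layers is not None else list(self.default_layers)


# Names as keys, types as values; the entries themselves are made up.
TOY_FE_TYPES = {"toy_filter": ToyFilterFE, "toy_hook": ToyHookFE}


def create_toy_fe(name, use_gpu=None, layers=None):
    """Build and return a fresh extractor; nothing is stored anywhere else."""
    if name not in TOY_FE_TYPES:
        raise ValueError(f"unknown feature extractor: {name!r}")
    fe_type = TOY_FE_TYPES[name]
    gpu = bool(use_gpu)  # None is treated as False in this sketch
    if issubclass(fe_type, ToyHookFE):
        # Layer-based extractors additionally receive the layer selection.
        return fe_type(use_gpu=gpu, layers=layers)
    return fe_type(use_gpu=gpu)


fe = create_toy_fe("toy_hook", layers=["a", "b"])
print(type(fe).__name__, fe.layers)
```

As in the documented method, the extractor is only returned; attaching it to a model is a separate step, which in Convpaint means creating a new ConvpaintModel.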

get_fe_defaults()

Returns the default parameters of the feature extractor.

Where they are not specified by the feature extractor model, the default Convpaint params are used.

Returns:

| Name | Type | Description |
|---|---|---|
| new_param | Param | Convpaint model defaults adjusted to the feature extractor defaults |

get_fe_layer_keys()

Returns the keys of the feature extractor layers (None if the model uses no layers).

Returns:

| Name | Type | Description |
|---|---|---|
| keys | list[str] or None | List of keys of the feature extractor layers, or None if the model uses no layers |

train(image, annotations, memory_mode=False, img_ids=None, use_rf=False, allow_writing_files=False, in_channels=None, skip_norm=False)

Trains the Convpaint model's classifier given images and annotations.

Uses the FeatureExtractor model to extract features according to the criteria specified in the Param object. Then uses the features of annotated pixels to train the classifier.

Trains the internal classifier, which is also returned.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| image | ndarray or list[ndarray] | Image to train the classifier on or list of images | required |
| annotations | ndarray or list[ndarray] | Annotations to train the classifier on or list of annotations. Image and annotation lists must correspond to each other. | required |
| memory_mode | bool | Whether to use memory mode. If True, the annotations are registered and updated, and only features for new pixels are extracted. | False |
| img_ids | str or list[str] | Image IDs to register the annotations with (when using memory_mode) | None |
| use_rf | bool | Whether to use a RandomForestClassifier instead of a CatBoostClassifier | False |
| allow_writing_files | bool | Whether to allow writing files for the CatBoostClassifier | False |
| in_channels | list[int] | List of channels to use for training | None |
| skip_norm | bool | Whether to skip normalization of the images before training. If True, the images are not normalized according to the parameter | False |

Returns:

| Name | Type | Description |
|---|---|---|
| clf | CatBoostClassifier or RandomForestClassifier | Trained classifier (also saved inside the model instance) |

segment(image, in_channels=None, skip_norm=False, use_dask=False)

Segments images by predicting the most probable class of each pixel using the trained classifier.

Uses the feature extractor model to extract features from the image. Predicts the classes of the pixels in the image using the trained classifier.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| image | ndarray or list[ndarray] | Image to segment or list of images | required |
| in_channels | list[int] | List of channels to use for segmentation | None |
| skip_norm | bool | Whether to skip normalization of the images before segmentation. If True, the images are not normalized according to the parameter | False |
| use_dask | bool | Whether to use dask for parallel processing | False |

Returns:

| Name | Type | Description |
|---|---|---|
| seg | ndarray or list[ndarray] | Segmented image or list of segmented images (according to the input). Dimensions are equal to the input image(s) without the channel dimension. |
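The train and segment calls are typically used together. The sketch below is written against any object that offers train(image, annotations) and segment(image) as documented here. StubModel is a hypothetical stand-in (a nearest-mean classifier on nested lists) so that the example runs without Convpaint installed, and the convention that label 0 means "not annotated" is an assumption of this sketch.

```python
class StubModel:
    """Stand-in with the same train/segment method names; NOT Convpaint."""

    def __init__(self):
        self.class_means = None

    def train(self, image, annotations):
        # Collect the mean intensity of annotated pixels for each class.
        # Assumption: label 0 marks pixels without annotation.
        sums = {}
        for img_row, ann_row in zip(image, annotations):
            for value, label in zip(img_row, ann_row):
                if label != 0:
                    total, count = sums.get(label, (0.0, 0))
                    sums[label] = (total + value, count + 1)
        self.class_means = {
            label: total / count for label, (total, count) in sums.items()
        }
        return self.class_means

    def segment(self, image):
        # Assign every pixel to the class with the closest mean intensity.
        means = self.class_means
        return [
            [min(means, key=lambda label: abs(means[label] - value)) for value in row]
            for row in image
        ]


def train_and_segment(model, image, annotations):
    """Train on sparse annotations, then predict a label for every pixel."""
    clf = model.train(image, annotations)
    seg = model.segment(image)
    return clf, seg


image = [[0.1, 0.2, 0.9], [0.0, 0.8, 1.0]]
annotations = [[1, 0, 0], [0, 0, 2]]  # two annotated pixels, one per class
clf, seg = train_and_segment(StubModel(), image, annotations)
print(seg)
```

With the library installed, model would be a ConvpaintModel instance and the inputs NumPy arrays; train_and_segment itself would not need to change.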

predict_probas(image, in_channels=None, skip_norm=False, use_dask=False)

Predicts the probabilities of the classes of the pixels in an image using the trained classifier.

Uses the feature extractor model to extract features from the image. Estimates the probability of each class based on the features and the trained classifier.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| image | ndarray or list[ndarray] | Image to predict probabilities for or list of images | required |
| in_channels | list[int] | List of channels to use for prediction | None |
| skip_norm | bool | Whether to skip normalization of the images before prediction. If True, the images are not normalized according to the parameter | False |
| use_dask | bool | Whether to use dask for parallel processing | False |

Returns:

| Name | Type | Description |
|---|---|---|
| probas | ndarray or list[ndarray] | Predicted probabilities of the classes of the pixels in the image or list of images. Dimensions are equal to the input image(s) without the channel dimension, with the class dimension added first. |
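The output shapes of segment and predict_probas follow from the input convention stated at the top of this page. A minimal sketch of that bookkeeping, assuming the helper name expected_output_shapes (hypothetical) and assuming that the number of classes is known from training:

```python
def expected_output_shapes(input_shape, n_classes, channel_mode="single"):
    """Derive output shapes from an input shape, per the documented convention.

    Hypothetical helper; not part of the Convpaint API.
    """
    shape = tuple(input_shape)
    if len(shape) == 2:
        spatial = shape  # single gray-scale image, no channel axis
    elif len(shape) == 3:
        if channel_mode in ("multi", "rgb"):
            spatial = shape[1:]  # drop the leading channel axis
        else:
            spatial = shape  # stack of 2D images, nothing to drop
    elif len(shape) == 4:
        spatial = shape[1:]  # channels first, then the stack
    else:
        raise ValueError(f"unsupported input shape: {shape}")
    return {
        "segment": spatial,
        # The class dimension is added first.
        "predict_probas": (n_classes,) + spatial,
    }


print(expected_output_shapes((3, 64, 64), n_classes=2, channel_mode="rgb"))
```

For an RGB image of shape (3, 64, 64) and two classes, this gives a (64, 64) segmentation and a (2, 64, 64) probability array.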

get_feature_image(data, memory_mode, img_ids, in_channels=None, skip_norm=False)

Returns the feature images extracted by the feature extractor model.

For details, see the _extract_features method.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| data | ndarray or list[ndarray] | Image(s) to extract features from | required |
| memory_mode | bool | Whether to use memory mode. If True, the annotations are registered and updated, and only features for new pixels are extracted. | required |
| img_ids | str or list[str] | Image IDs to register the annotations with (when using memory_mode) | required |
| in_channels | list[int] | List of channels to use for feature extraction | None |
| skip_norm | bool | Whether to skip normalization of the images before feature extraction. If True, the images are not normalized according to the parameter | False |

Returns:

| Name | Type | Description |
|---|---|---|
| features | ndarray or list[ndarray] | Extracted features of the image(s) or list of features for each image if input is a list. Reshaped to the input images' shapes. Features dimension is added first. |

reset_classifier()

Resets the classifier of the model.

- Resets the classifier object to None

- Resets possible path to the classifier saved in the Param object

- Resets the training features used with memory_mode

reset_training()

Resets the features and targets of the model which are used to iteratively train the model with the option memory_mode.
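The idea behind memory_mode, registering annotations per image ID and only processing pixels that are new, can be illustrated with a toy registry. This is a conceptual sketch only: AnnotationMemory and its methods are hypothetical, label 0 is assumed to mean "not annotated", and the library's actual bookkeeping may differ.

```python
class AnnotationMemory:
    """Toy registry of annotated pixels per image ID (not Convpaint's code)."""

    def __init__(self):
        self._seen = {}  # img_id -> {(row, col): label}

    def update(self, img_id, annotations):
        """Register annotations; return pixels that still need feature extraction."""
        known = self._seen.get(img_id, {})
        current = {}
        new_pixels = []
        for r, row in enumerate(annotations):
            for c, label in enumerate(row):
                if label == 0:
                    continue  # assumption: 0 marks unannotated pixels
                current[(r, c)] = label
                if (r, c) not in known:
                    new_pixels.append((r, c))
        # Keep the latest annotation state for this image.
        self._seen[img_id] = current
        return new_pixels

    def reset(self):
        """Forget everything, analogous in spirit to reset_training()."""
        self._seen.clear()


memory = AnnotationMemory()
print(memory.update("img0", [[1, 0], [0, 0]]))  # first pass: one new pixel
print(memory.update("img0", [[1, 2], [0, 0]]))  # second pass: only the added pixel
```

After reset(), all annotated pixels count as new again, which matches the purpose of clearing stored training features before starting a fresh iterative session.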